Learning + Control Theory

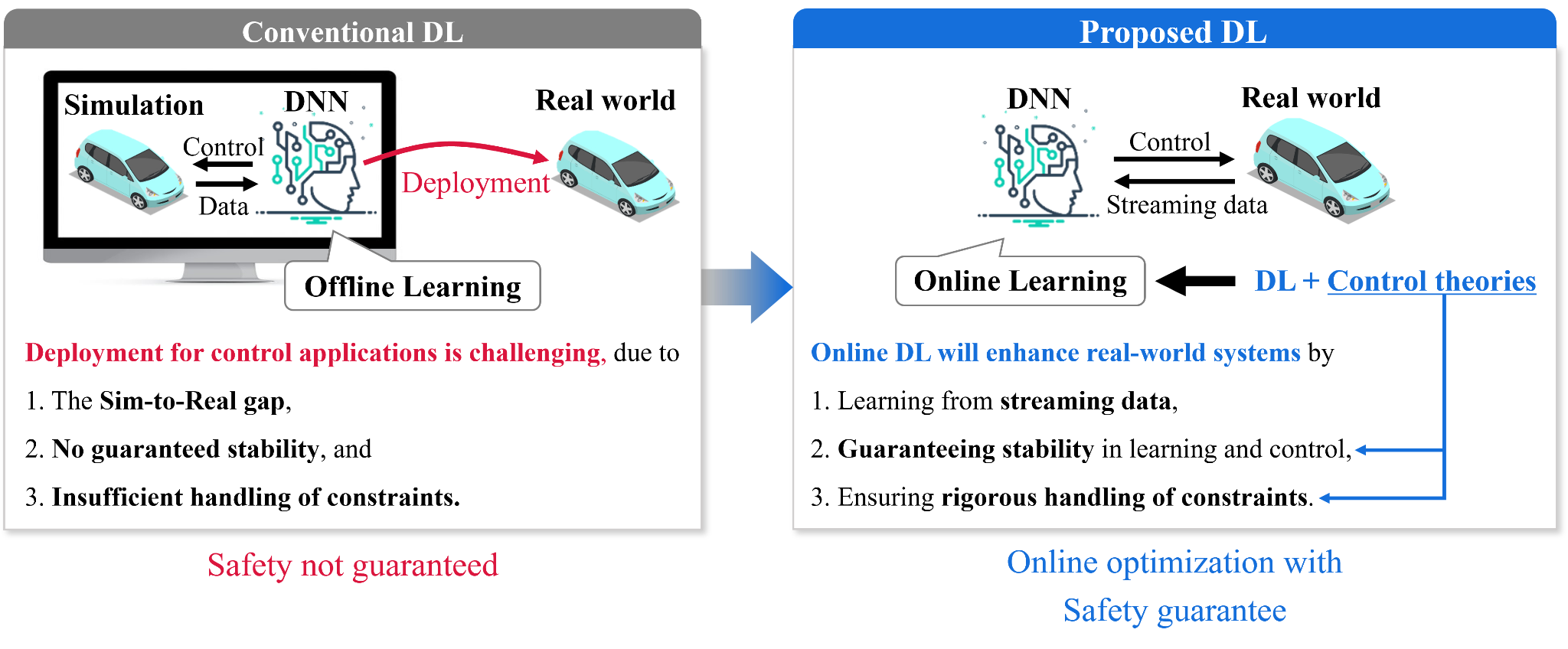

We aim to combine learning and control theory so that each compensates for the other's weaknesses. In doing so, we address the limitations of conventional deep learning (DL) in control applications. Despite remarkable progress in DL, its adoption in control remains constrained by three major challenges: the sim-to-real gap, the lack of stability guarantees, and the difficulty of handling constraints. These limitations prevent conventional DL from ensuring safety in real-world applications. To overcome them, we propose a DL framework in which deep neural networks (DNNs) learn continuously from streaming data collected directly from the real system, which addresses the sim-to-real gap. By building the learning process on control theory, the framework also guarantees stability and handles constraints rigorously. With these three properties (learning on the real system, guaranteed stability, and constraint satisfaction), the framework enables online optimization with safety guarantees, making it a viable approach for real-world control applications.
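The idea of a network that learns online while control theory keeps the loop stable and the weights constrained can be illustrated with a classical neuro-adaptive controller. The sketch below is a hypothetical illustration, not the CoNAC method of [1] or the controller of [3]. Everything in it is an assumption chosen for brevity: the scalar plant x_dot = f(x) + u, the nonlinearity `f_true`, the Gaussian radial-basis network (one hidden layer with fixed centers rather than a deep network), the gains, and the weight-norm bound `w_max`. With tracking error e = x - x_d, control u = x_d_dot - k*e - W_hat^T phi(x), and Lyapunov function V = e^2/2 + ||W - W_hat||^2/(2*gamma), the update W_hat_dot = gamma*phi(x)*e cancels the cross term, so V_dot = -k*e^2 up to the network's approximation error. Rescaling W_hat back into the ball ||W_hat|| <= w_max does not increase V as long as the ideal weights lie inside that ball. Input constraints are not handled here.

```python
import math

# Hypothetical plant nonlinearity, unknown to the controller: x_dot = f(x) + u.
def f_true(x: float) -> float:
    return 2.0 * math.sin(x) + 0.5 * x

# Hypothetical network: Gaussian radial-basis features with fixed centers.
CENTERS = [-2.0 + 0.5 * i for i in range(9)]
SIGMA = 0.5

def features(x: float) -> list[float]:
    return [math.exp(-((x - c) ** 2) / (2.0 * SIGMA ** 2)) for c in CENTERS]

def project_to_ball(w: list[float], w_max: float) -> list[float]:
    """Rescale w so that its Euclidean norm does not exceed w_max (weight constraint)."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    if norm <= w_max:
        return w
    return [wi * (w_max / norm) for wi in w]

def simulate(gamma: float, k: float = 5.0, w_max: float = 10.0,
             t_end: float = 20.0, dt: float = 1e-3) -> tuple[list[float], float]:
    """Track x_d(t) = sin(t) while learning f online from the streaming state.

    Returns the history of |e(t)| and the largest weight norm reached.
    gamma = 0 disables learning (fixed zero weights) for comparison.
    """
    x, t = 0.5, 0.0
    w_hat = [0.0] * len(CENTERS)
    errors, max_norm = [], 0.0
    for _ in range(int(round(t_end / dt))):
        xd, xd_dot = math.sin(t), math.cos(t)
        e = x - xd
        phi = features(x)
        f_hat = sum(wi * p for wi, p in zip(w_hat, phi))
        # Cancel the learned estimate of f and add proportional error feedback.
        u = xd_dot - k * e - f_hat
        # Lyapunov-derived update W_hat_dot = gamma * phi * e (one Euler step),
        # then projection onto the weight-norm ball.
        w_hat = project_to_ball(
            [wi + dt * gamma * p * e for wi, p in zip(w_hat, phi)], w_max)
        max_norm = max(max_norm, math.sqrt(sum(wi * wi for wi in w_hat)))
        # Advance the plant one Euler step.
        x += dt * (f_true(x) + u)
        t += dt
        errors.append(abs(e))
    return errors, max_norm

if __name__ == "__main__":
    n_last = 5000  # samples in the final 5 s at dt = 1e-3
    errs_learn, norm_learn = simulate(gamma=20.0)
    errs_fixed, _ = simulate(gamma=0.0)  # no learning, for comparison
    print("mean |e| over last 5 s (learning):", sum(errs_learn[-n_last:]) / n_last)
    print("mean |e| over last 5 s (no learning):", sum(errs_fixed[-n_last:]) / n_last)
    print("max weight norm:", norm_learn)
```

Running the script compares the late-phase tracking error with and without online learning. The rescaling step is a simple discrete-time stand-in for the continuous projection operators usually used in adaptive-control stability proofs.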

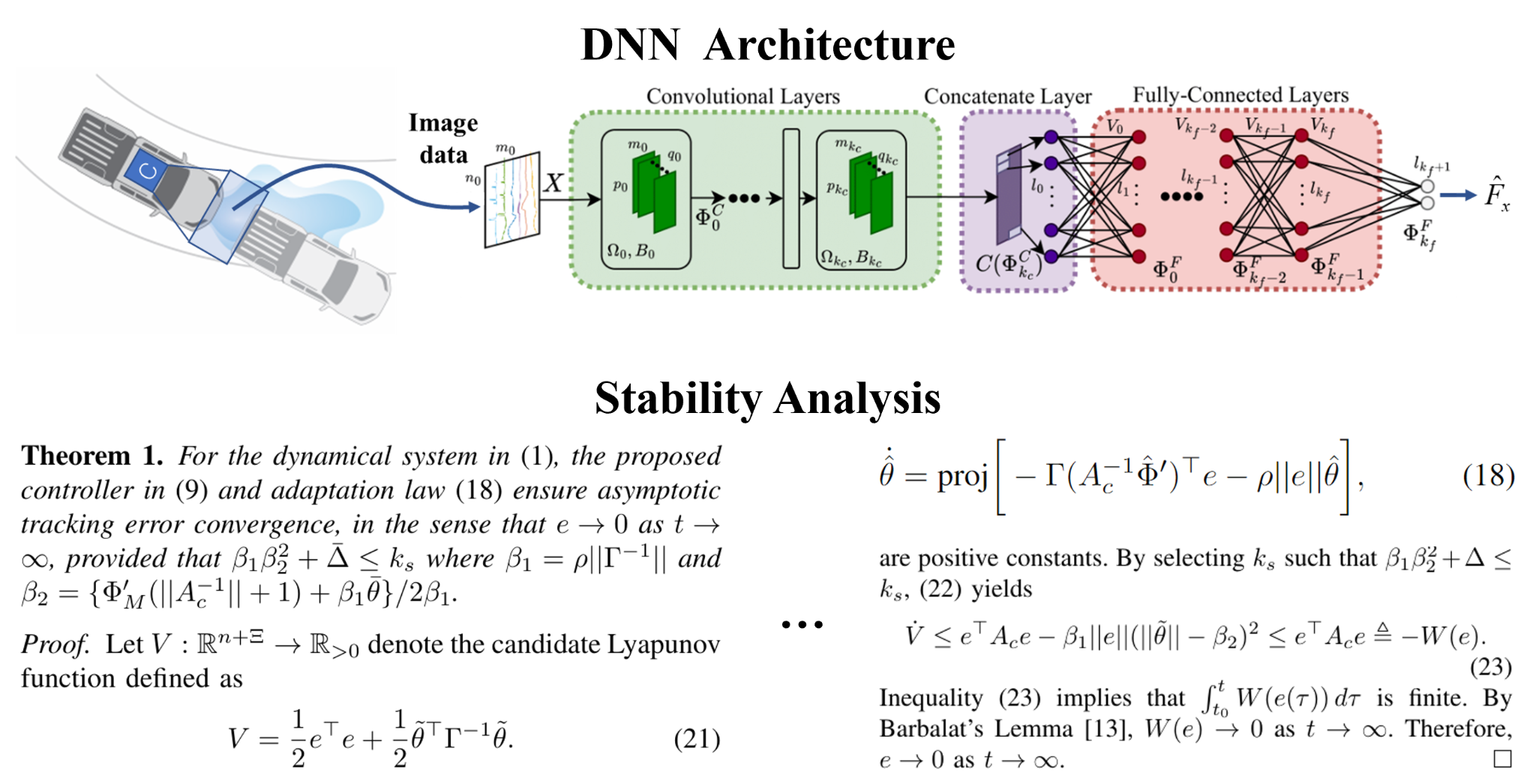

A preliminary result of the proposed DL-based control approach is reported in [3]. There, a DNN is designed to learn the optimal control law for autonomous driving in real time, without relying on any prior information about the system. The stability of both the learning and control processes is theoretically proven. In a vehicle simulation, the DNN learned the optimal control law online while maintaining stability. These results support the feasibility of the proposed online DL approach for real-world control applications.

Relevant Work

[1] M. Ryu, D. Hong, and K. Choi*, “Constrained Optimization-Based Neuro-Adaptive Control (CoNAC) for Uncertain Euler-Lagrange Systems Under Weight and Input Constraints,” Under review for IEEE Transactions on Neural Networks and Learning Systems.

[2] M. Ryu, J. Kim, and K. Choi*, “Imposing Weight Norm Constraint for Neuro-Adaptive Control,” Under review for European Control Conference (ECC) 2025.

[3] M. Ryu and K. Choi*, “CNN-based Adaptive Controller with Stability Guarantees,” Under review for Learning for Dynamics and Control Conference (L4DC) 2025.

[4] S. Jang, M. Ryu, and K. Choi*, “Physics-Informed Online Learning of Flux Linkage Model for Synchronous Machines,” TechRxiv, Preprint, 172893538.89561848/v1.

[5] K. Choi, H. Lee, and W. Kim*, “Using Deep Reinforcement Learning for Dynamic Gain Adjustment of a Disturbance Observer,” TechRxiv, Preprint, 171174527.70147690/v1.

[6] Y. Jeong, S. Jang, and K. Choi*, “Neural Network-based Nonlinearity Estimation of Voltage Source Inverter for Synchronous Machine Drives,” in 2024 IEEE 33rd International Symposium on Industrial Electronics (ISIE), 2024: IEEE.