A Comparative Study of Reinforcement Learning and Analytical Methods for Optimal Control

- Abstract

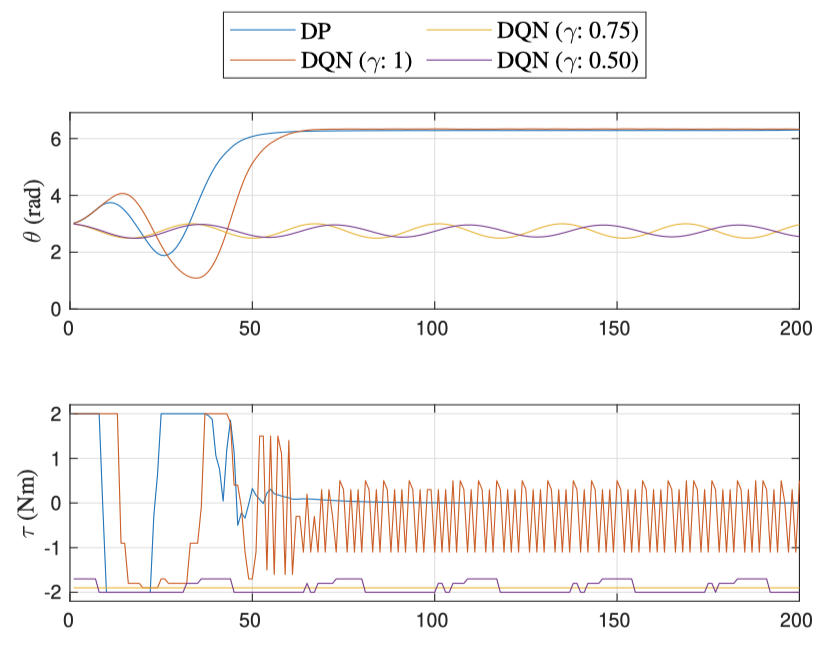

Numerous reinforcement learning (RL) algorithms have been introduced to solve challenging tasks such as game playing, natural language processing, and control. In particular, RL can find a good policy for control systems whose optimal control sequence is difficult to obtain by analytical methods. This paper compares RL with analytical methods for optimal control in an inverted pendulum environment, considering dynamic programming (DP) and model predictive control (MPC) as the analytical methods. The control results of RL, DP, and MPC are compared qualitatively and quantitatively in terms of total reward, state response, and control sequence to investigate the relationships among them. Because the three methods have similar problem formulations, these relationships can be explained by two RL parameters: the discount factor and the exploration rate. This comparative study is expected to provide insights to those studying RL algorithms and optimal control theory.
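One way to see why the discount factor can link these formulations is to compare a discounted sum of rewards, as used in RL, with an undiscounted sum over a fixed horizon, as in a finite-horizon MPC or DP objective. The following is a minimal sketch, not the paper's code: the function names, the reward sequence, and the rule-of-thumb horizon 1 / (1 - gamma) are illustrative assumptions.

```python
def discounted_return(rewards, gamma):
    """RL-style objective: sum of gamma**t * r_t over the sequence."""
    return sum((gamma ** t) * r for t, r in enumerate(rewards))


def finite_horizon_return(rewards, horizon):
    """Finite-horizon objective: undiscounted sum over the first `horizon` steps."""
    return sum(rewards[:horizon])


def effective_horizon(gamma):
    """Rule-of-thumb horizon implied by a discount factor (requires gamma < 1)."""
    return 1.0 / (1.0 - gamma)


if __name__ == "__main__":
    # Hypothetical per-step rewards (negative costs) that shrink as a
    # pendulum settles near the upright position. Not data from the paper.
    rewards = [-(0.8 ** t) for t in range(50)]

    for gamma in (0.5, 0.9, 0.99):
        horizon = round(effective_horizon(gamma))
        print(
            f"gamma={gamma}: discounted={discounted_return(rewards, gamma):.3f}, "
            f"horizon~{horizon}, "
            f"finite-horizon sum={finite_horizon_return(rewards, horizon):.3f}"
        )
```

A small discount factor weights only the first few steps, much like a short prediction horizon, while a discount factor close to 1 approaches the undiscounted long-horizon objective. The exploration rate is not modeled in this sketch.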