Online Reinforcement Learning for Optimal Tracking in Servo Positioning Systems

Online Reinforcement Learning-Based Optimal Angle Tracking Control for Servo Systems

- Abstract

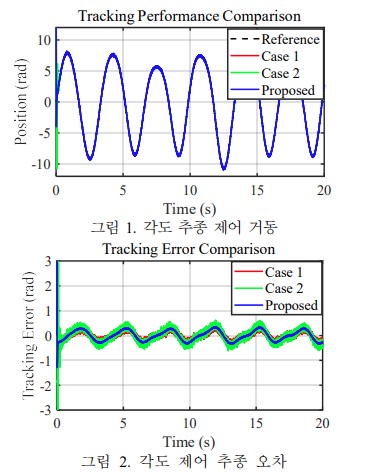

This paper proposes an online reinforcement-learning-based optimal tracking control method for output-feedback servo systems subject to unknown disturbances. The system is reformulated in control-affine form, with the uncertainties represented as a single lumped nonlinear function. An online identifying filter estimates the unknown dynamics in real time, while an actor-critic neural network approximates the value function and the optimal control policy. The method yields an approximate solution of the Hamilton–Jacobi–Bellman (HJB) equation, with convergence ensured by adaptive learning laws. Simulation results demonstrate robust tracking performance under time-varying disturbances.
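
For orientation, the textbook form of the problem the abstract describes can be sketched as follows. This is a generic formulation, not the paper's own equations. Every symbol here is an assumption introduced for illustration: the tracking error $e$, the lumped drift $F$, the input gain $G$, the weights $Q \succeq 0$ and $R \succ 0$, the basis vector $\varphi$, and the critic and actor weights $\hat{W}_c$, $\hat{W}_a$. The paper's notation and cost function may differ.

```latex
\begin{align}
  % Control-affine error dynamics; F lumps the unknown dynamics and disturbances
  \dot{e} &= F(e) + G(e)\,u, \\
  % Infinite-horizon quadratic cost to be minimized
  V(e(t)) &= \int_{t}^{\infty} \left( e^{\top} Q\, e + u^{\top} R\, u \right) d\tau, \\
  % HJB equation satisfied by the optimal value function V^{*}
  0 &= e^{\top} Q\, e + u^{*\top} R\, u^{*}
       + \left( \nabla V^{*} \right)^{\top} \left( F(e) + G(e)\,u^{*} \right), \\
  % Stationarity condition: 2 R u + G^{\top} \nabla V^{*} = 0
  u^{*} &= -\tfrac{1}{2}\, R^{-1} G(e)^{\top} \nabla V^{*}(e), \\
  % Critic and actor approximations over a shared basis \varphi
  \hat{V}(e) &= \hat{W}_c^{\top} \varphi(e), \qquad
  \hat{u}(e) = -\tfrac{1}{2}\, R^{-1} G(e)^{\top} \nabla \varphi(e)^{\top} \hat{W}_a .
\end{align}
```

In a scheme of this kind, the identifying filter would supply an estimate of $F$, and the adaptive laws would tune $\hat{W}_c$ and $\hat{W}_a$ online to drive the residual of the HJB equation toward zero.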